Multi-agent AI workflows (technical deep dive)

Architecture principles

In high-stakes decisions, a multi-agent workflow is only valuable if it is traceable, eval-driven, and designed for human review. This page summarizes the key technical patterns we used to meet those requirements.

What this page covers

This deep dive focuses on the technical delivery patterns that made the workflow production-grade:

- Decomposing investment analysis into smaller, auditable tasks

- Using strict schemas and structured evidence capture

- Running evals (rubrics + LLM-as-a-judge) to validate quality

- Building for human-in-the-loop (HITL) review, traceability, and governance

Key patterns

1) Atomic agents with strict contracts

Rather than one monolithic “do the whole thing” prompt, we used multiple agents with narrow responsibilities and strict I/O schemas.
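As an illustration only, here is a minimal sketch of one "atomic" agent whose input and output are checked against typed schemas at runtime. It assumes plain Python dataclasses as the schema layer. The names `Agent`, `ContractError`, `MarketSizingInput`, `MarketSizingOutput` and the market-sizing task are all hypothetical, and the `run_fn` stub stands in for a real model call.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar

In = TypeVar("In")
Out = TypeVar("Out")


class ContractError(ValueError):
    """Raised when an agent's input or output violates its declared schema."""


@dataclass(frozen=True)
class MarketSizingInput:  # hypothetical input schema
    company: str
    sector: str


@dataclass(frozen=True)
class MarketSizingOutput:  # hypothetical output schema
    tam_usd: float  # estimated total addressable market, in USD
    rationale: str

    def __post_init__(self) -> None:
        # Field-level checks: reject outputs that have the right shape but bad values.
        if self.tam_usd < 0:
            raise ContractError("tam_usd must be non-negative")
        if not self.rationale.strip():
            raise ContractError("rationale must not be empty")


@dataclass(frozen=True)
class Agent(Generic[In, Out]):
    """One narrow responsibility, with input and output types checked at runtime."""
    name: str
    input_type: type
    output_type: type
    run_fn: Callable[[In], Out]

    def run(self, payload: In) -> Out:
        if not isinstance(payload, self.input_type):
            raise ContractError(f"{self.name}: input must be {self.input_type.__name__}")
        result = self.run_fn(payload)
        if not isinstance(result, self.output_type):
            raise ContractError(f"{self.name}: output must be {self.output_type.__name__}")
        return result


# Hypothetical stub standing in for a model call whose reply is parsed into
# MarketSizingOutput; the figure is placeholder data, not a real estimate.
sizing_agent = Agent(
    name="market_sizing",
    input_type=MarketSizingInput,
    output_type=MarketSizingOutput,
    run_fn=lambda inp: MarketSizingOutput(
        tam_usd=1.0e9, rationale=f"Placeholder estimate for {inp.sector}"
    ),
)

result = sizing_agent.run(MarketSizingInput(company="ExampleCo", sector="logistics"))
```

Because every agent fails loudly on a malformed payload, a bad step surfaces at its own boundary instead of silently corrupting downstream synthesis.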

2) Evidence-first synthesis

Every claim in the output should map back to an evidence object (source, excerpt, timestamp, etc.).
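One way to enforce this rule is to make claims reference evidence by ID and flag any claim that cites nothing, or cites an ID not in the evidence store. This is a minimal sketch under that assumption. The `Evidence` fields mirror the ones listed above (source, excerpt, timestamp), while `Claim`, `unsupported_claims`, and the sample data are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Evidence:
    evidence_id: str
    source: str          # where the evidence came from (document, URL, dataset)
    excerpt: str         # verbatim text that supports the claim
    retrieved_at: datetime


@dataclass(frozen=True)
class Claim:
    text: str
    evidence_ids: tuple[str, ...]  # IDs of Evidence objects backing this claim


def unsupported_claims(claims: list[Claim], evidence: list[Evidence]) -> list[Claim]:
    """Return claims that cite no evidence, or cite IDs missing from the evidence store."""
    known_ids = {e.evidence_id for e in evidence}
    return [
        c for c in claims
        if not c.evidence_ids or any(eid not in known_ids for eid in c.evidence_ids)
    ]


# Placeholder data for illustration only.
evidence = [
    Evidence(
        evidence_id="E1",
        source="example-company-memo.pdf",
        excerpt="(example excerpt)",
        retrieved_at=datetime(2024, 1, 15, tzinfo=timezone.utc),
    )
]
claims = [
    Claim("Claim backed by E1.", ("E1",)),
    Claim("Claim with no citation.", ()),
    Claim("Claim citing a missing source.", ("E9",)),
]
flagged = unsupported_claims(claims, evidence)
```

Running this check before synthesis output reaches a reviewer turns "every claim should map back to evidence" into a hard, testable condition.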

3) Eval-driven iteration

We used evaluation loops to compare outputs against rubrics co-developed with the investment team.
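A rubric-based eval loop can be sketched as a weighted average of per-criterion judge scores, aggregated over an eval set so that two prompt or agent versions can be compared on the same numbers. This sketch assumes a judge that returns a score in [0, 1] per criterion. `Criterion`, `score_output`, `evaluate`, the 0.7 threshold, and the rubric itself are hypothetical. `keyword_judge` is a deterministic stand-in so the example runs offline, not an LLM-as-a-judge implementation.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Criterion:
    name: str
    description: str
    weight: float


# A judge maps (criterion, output) to a score in [0, 1]. In a real eval this
# would be an LLM-as-a-judge call; here it is a hypothetical interface.
Judge = Callable[[Criterion, str], float]


def score_output(output: str, rubric: list[Criterion], judge: Judge) -> float:
    """Weighted average of per-criterion judge scores, normalized to [0, 1]."""
    total_weight = sum(c.weight for c in rubric)
    weighted = 0.0
    for c in rubric:
        s = judge(c, output)
        if not 0.0 <= s <= 1.0:
            raise ValueError(f"judge returned {s} for {c.name}; expected [0, 1]")
        weighted += c.weight * s
    return weighted / total_weight


def evaluate(outputs: list[str], rubric: list[Criterion], judge: Judge,
             threshold: float = 0.7) -> dict[str, float]:
    """Aggregate scores over an eval set so prompt or agent changes can be compared."""
    scores = [score_output(o, rubric, judge) for o in outputs]
    return {
        "mean_score": sum(scores) / len(scores),
        "pass_rate": sum(s >= threshold for s in scores) / len(scores),
    }


# Hypothetical rubric and a deterministic keyword "judge" so the sketch runs offline.
rubric = [
    Criterion("cites_evidence", "Claims reference evidence IDs such as [E1]", 2.0),
    Criterion("states_risks", "The memo names key risks", 1.0),
]


def keyword_judge(criterion: Criterion, output: str) -> float:
    keywords = {"cites_evidence": "[e", "states_risks": "risk"}
    return 1.0 if keywords[criterion.name] in output.lower() else 0.0


report = evaluate(
    ["Claim A [E1]. Key risk: customer concentration.", "Strong team, great market."],
    rubric,
    keyword_judge,
)
```

Here the first output satisfies both criteria (score 1.0) and the second satisfies neither (score 0.0), so `report` holds a mean score and pass rate of 0.5. Swapping `keyword_judge` for a model-backed judge leaves the loop unchanged.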

4) Human-in-the-loop by default

Review gates are part of the design, not an afterthought.
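A review gate can be modeled as a small state machine. Agent output starts as pending review, a named human approves or rejects it exactly once, each decision is appended to an audit log, and nothing is released without approval. This is a minimal sketch assuming an in-memory log. `Status`, `ReviewItem`, `release`, and the reviewer and memo values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    PENDING_REVIEW = "pending_review"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class ReviewItem:
    item_id: str
    content: str
    status: Status = Status.PENDING_REVIEW
    audit_log: list[str] = field(default_factory=list)

    def decide(self, reviewer: str, approve: bool, note: str = "") -> None:
        """Record one human decision; an item can be decided only once."""
        if self.status is not Status.PENDING_REVIEW:
            raise RuntimeError(f"{self.item_id} is already {self.status.value}")
        self.status = Status.APPROVED if approve else Status.REJECTED
        timestamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(f"{timestamp} {reviewer} {self.status.value}: {note}")


def release(item: ReviewItem) -> str:
    """The gate: only reviewer-approved items leave the workflow."""
    if item.status is not Status.APPROVED:
        raise PermissionError(f"{item.item_id} has not been approved by a reviewer")
    return item.content


memo = ReviewItem("memo-001", "Recommendation: proceed to diligence.")
memo.decide(reviewer="reviewer-1", approve=True, note="evidence spot-checked")
released = release(memo)
```

Making `release` the only exit path means the gate cannot be skipped by accident, and the audit log records who approved what and when.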

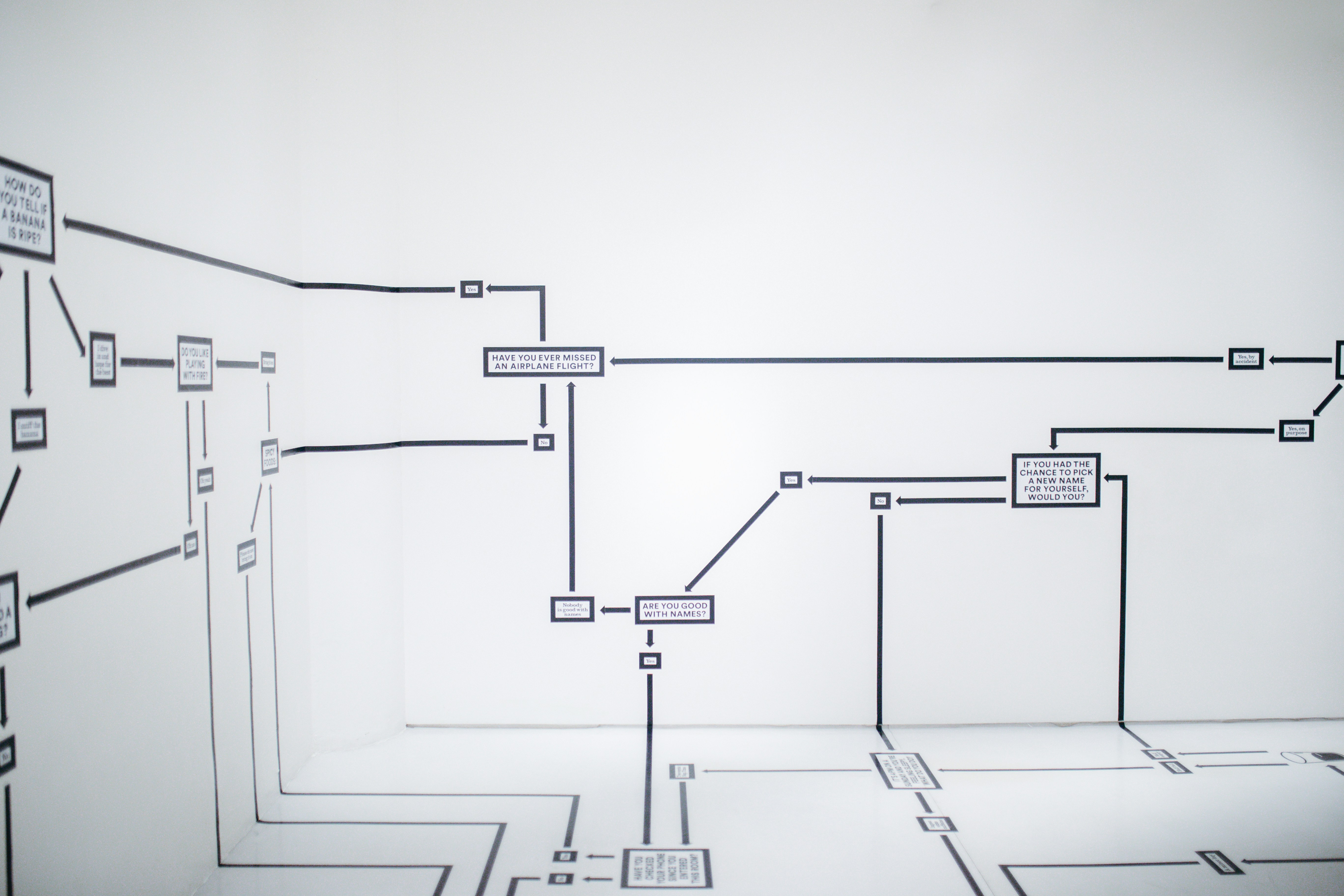

Related case studies

Enabling a venture studio to accelerate capital allocations

Challenge: Capital allocation decisions were slow, inconsistent, and difficult to audit.

Solution: A standardized decision workflow with clear inputs/outputs, scoring, and review gates.
